That cat is thoroughly out of the bag. In just the last three weeks there have been three different ChatGPT competitors launched in China. Facebook's Llama model is available to anyone with the hardware to run it; people have even got it running on a Raspberry Pi. No one is going to pause their research. In the words of Vernor Vinge:

> But if the technological Singularity can happen, it will. Even if all the governments of the world were to understand the "threat" and be in deadly fear of it, progress toward the goal would continue. The competitive advantage -- economic, military, even artistic -- of every advance in automation is so compelling that forbidding such things merely assures that someone else will get them first.

https://frc.ri.cmu.edu/~hpm/book98/com. ... arity.html

His whole article on the technological singularity (or Bingularity, as some people are calling it) is well worth a read. Even at thirty years old, its predictions are impressive. (Also, if you want a look at the "blueprint" a lot of the people pushing Augmented Reality are working from, you should read his novel Rainbow's End.)

And some people genuinely are in deadly fear of the singularity:

AI Theorist Says Nuclear War Preferable to Developing Advanced AI

https://www.vice.com/en/article/ak3dkj/ ... dvanced-ai

That would be the same theorist who freaked the fuck out when the Roko's Basilisk thought experiment was posted on LessWrong.

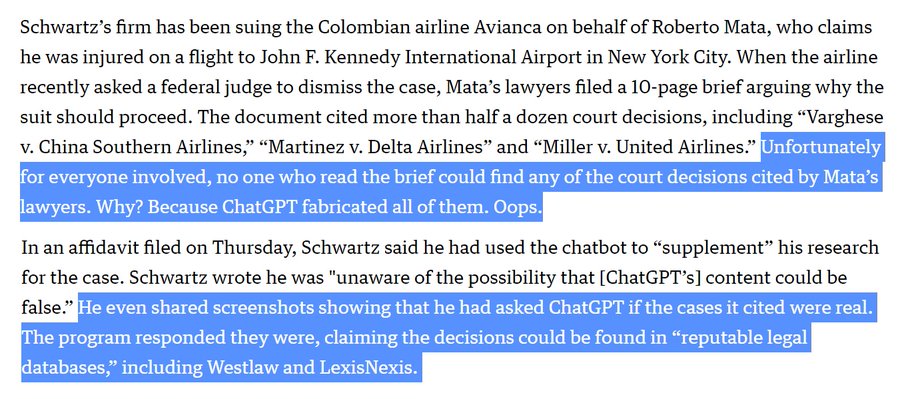

It's also pretty ironic that Elon Musk signed that letter when he's one of the co-founders of OpenAI, the people behind ChatGPT. To be fair, he did warn about summoning the demon, but the problem isn't really that we're summoning a demon; it's that we don't have any fucking idea what we're summoning. Could be nothing, an angel, a demon, a trickster god or some dude named Bob with questionable views on race.

And that, I think, is why so many people are freaking out about it: no one seems to have a clue exactly where things are heading. We could be right at a plateau, with the tech leveling off and becoming just another everyday thing we take for granted, with specific use cases like online chat or search. Or we could still be at the very start of things.

A year ago I wouldn't have thought it possible that I could be running Stable Diffusion on my own computer right now. Six months ago I didn't think it would be possible for me to run what is essentially the Star Trek computer on my own computer right now, and three months ago I didn't think it would be possible for me to run text-to-video on my own computer right now. I genuinely don't have a fucking clue what will be possible in a year, let alone five years, and I suspect that same ignorance applies to most of the people actually making this stuff. Everyone has been caught off guard by how fast things are moving.

"I only read American. I want my fantasy pure." - Dave